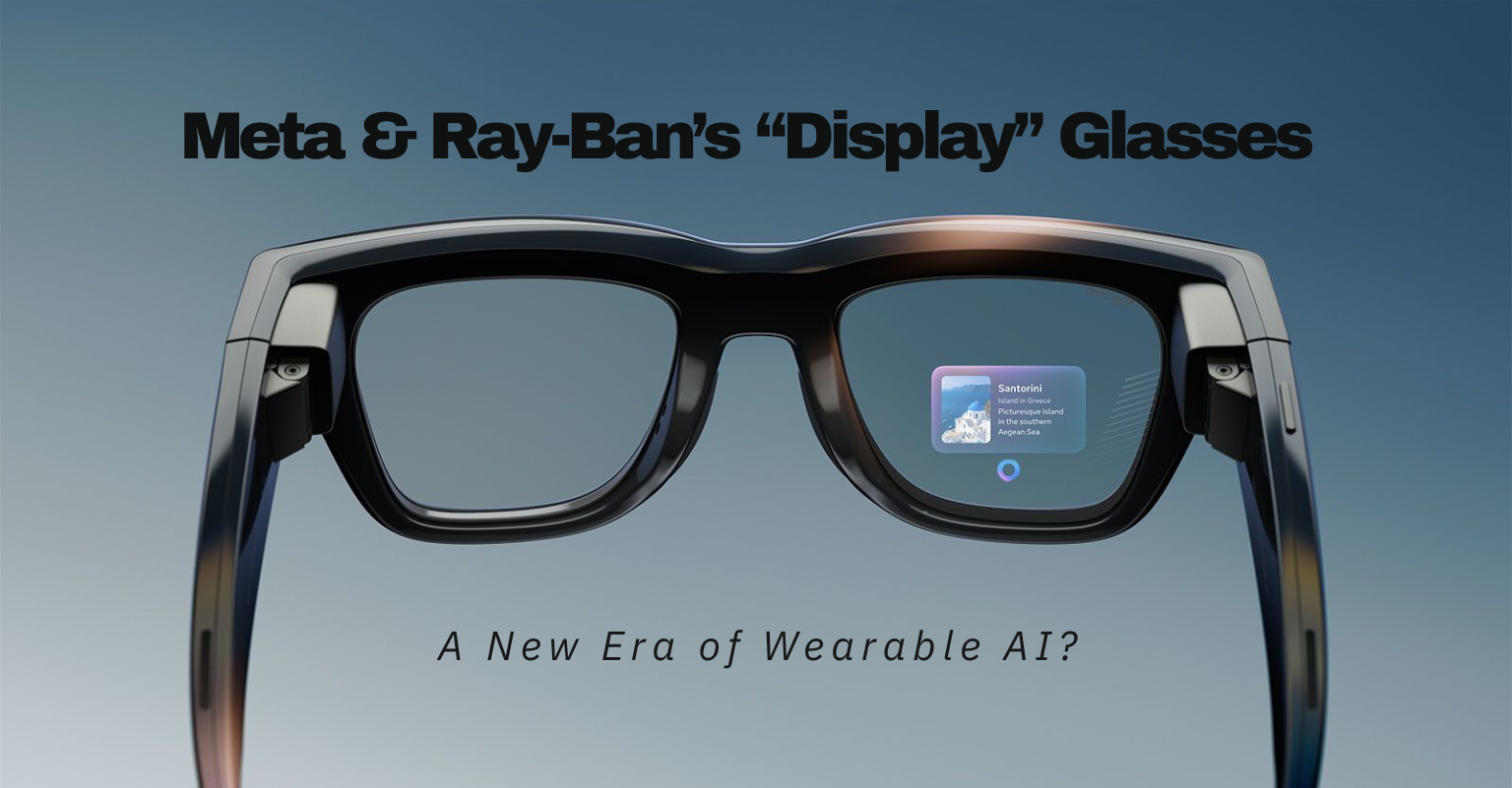

Meta has just unveiled the newest addition to its smart glasses line: the Meta Ray-Ban Display. Announced at Meta Connect 2025, these AI-enhanced glasses include a built-in display and a wristband controller, and mark a significant step forward in wearable tech.

Mark Zuckerberg revealed three new models at Connect. The priciest breaks with the familiar "Ray-Ban Meta" naming and puts Meta first, as the 'Meta Ray-Ban Display Glasses'. Here's what makes them interesting, what questions remain, and what they might mean for the future of "always-on" AI glasses.

What They Are — Features & Spec Highlights

From the public announcements, leaks, and partner sites, here are the key features:

| Component | What It Offers |

|---|---|

| In-Lens Display | A full-color, high-resolution monocular display (i.e. placed in the right lens). It shows notifications, navigation, viewfinder for the camera, translation, messages, visual responses from AI, etc. |

| Specs of Display | Approx. 600 × 600 pixels, about 20° field of view, refresh/display rates around 90Hz for the display engine, though content updates around 30Hz. Brightness ranges from ~ 30 up to 5,000 nits; there’s UV detection so the display can adjust. |

| Meta Neural Band | A wristband that uses EMG (electromyography) sensors to pick up subtle muscle signals (e.g. small finger motions) for interacting with the glasses: scrolling, clicking, potentially even typing. IPX7 water rating. Battery around 18 hours. |

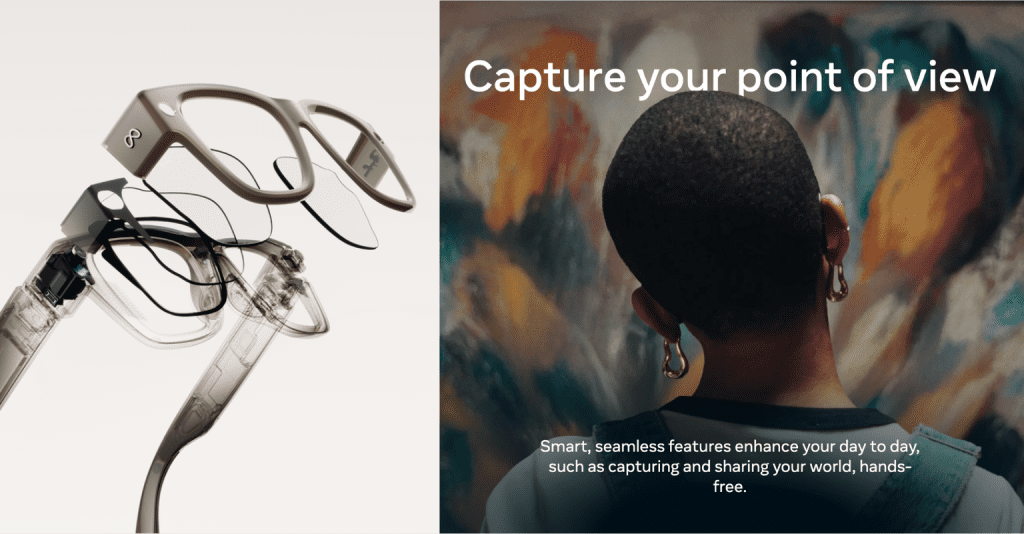

| Camera / Audio / Microphones | 12MP camera, multiple mics (five-mic array), speakers off the ear; audio features like voice control. |

| Battery Life | Mixed-use battery expectancy is about 6 hours for the glasses alone; with a charging case, you can extend usable time substantially (some sources say ~30 hours total with case). |

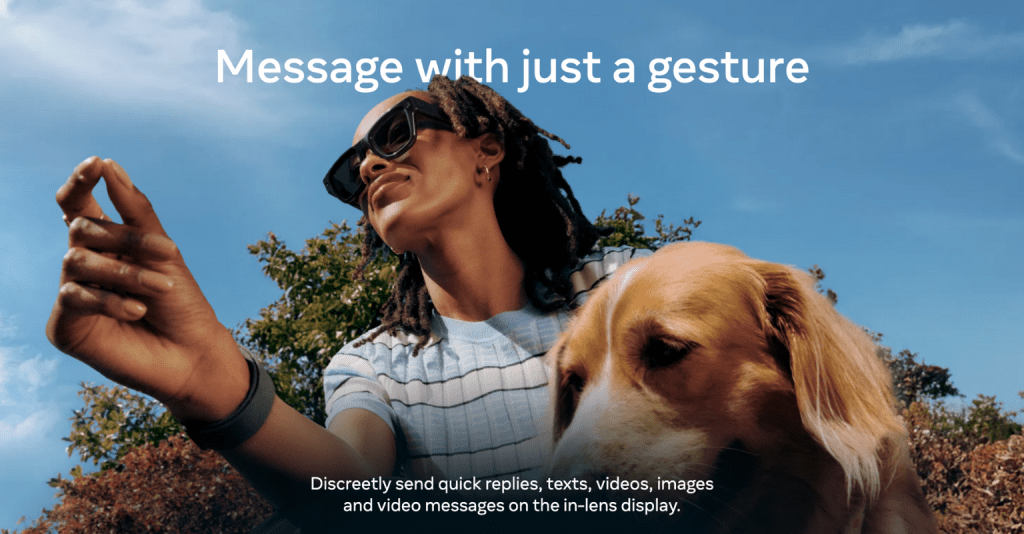

| Other Features | Live captions; visual translation of text and speech; navigation; integration with Meta AI; messaging & video-calls (e.g. over WhatsApp, Messenger etc.); viewfinder preview of what the camera sees before capturing. |

Price & Availability

Price: US$799 for the glasses + Neural Band package.

Launch date in the U.S.: September 30, 2025. Other markets (Canada, UK, France, Italy, etc.) follow in early 2026.

Sales will initially be in-store only (e.g. Best Buy, Ray-Ban stores, LensCrafters, some Verizon outlets).

What’s New / What’s Different

Compared with earlier smart glasses, including the previous Ray-Ban Meta and Ray-Ban Stories lines, this product stands out in a few ways:

Integrated Display: previous Ray-Ban smart glasses could take photos and play audio, with little more than an LED indicator, but had no in-lens display for visual UI content. This is a major move toward AR-like interaction.

Gesture Control via EMG Wristband: reading muscle signals, rather than relying only on touch, voice, or large gestures, allows more discreet and possibly more intuitive control. For short interactions, this could feel more natural.

High Brightness and Outdoor Use: with up to 5,000 nits of brightness and UV detection, the display is designed to stay readable outdoors in bright light. That's vital if these glasses are to be practical.

Focus on "Looking Up / Staying Present": Meta is emphasizing glanceable tasks and brief interactions. The display sits off to the side rather than blocking your central view, and it doesn't stay on all the time. The aim is to supplement your surroundings rather than distract from them.

What Are the Trade-Offs / Challenges

No product is perfect. Here are some of the trade-offs and questions that remain:

Battery Life vs. Use: 6 hours of mixed use is solid but not exceptional, and heavy use of the display and camera will likely cut into it. The charging case helps, but heavy users will still need to plan their recharges.

Display Limitations: A monocular (single-lens) display means content is visible to only one eye, off to the side. Some tasks won't feel immersive, and some users may notice a slight mismatch between their eyes or eyestrain. The roughly 30 Hz content update rate may also limit how smoothly motion is displayed.

Weight / Bulk / Design Compromises: Adding a display and camera to the frame tends to make glasses heavier and thicker. Comfort and aesthetics matter a lot with eyewear, so Meta has to balance functionality against wearability.

Privacy & Social Acceptability: As with any glasses that have cameras and always-on microphones, people around you may feel uneasy. How visible the recording indicator is, how clear it is what's being recorded, and how data is stored will all matter.

App / Ecosystem Support: Usefulness will depend heavily on software: how well Meta AI performs, which apps are supported, how smooth hands-free navigation is, and how accurate translation is. Early demos showed promise but also suggested that some features may still be rough.

Cost and Access: At US$799, this is a premium device, and the in-store-only initial rollout could further limit access. For many users, price and convenience will be barriers.

These glasses are more than just another gadget; they may signal something important about where wearable technology and AI are headed:

From Audio to Visual AI: Previous smart glasses tended to rely on audio and voice commands. Adding a display means richer visual feedback, which can expand what smart glasses can do (maps, navigation, visual AI responses, etc.).

Hands-Free, Glanceable Interfaces: The goal seems to be enabling micro-interactions without reaching for a phone: glancing at information, sending quick replies, translating, navigating. That convenience could change how people interact with tech in daily life.

Incremental AR: Though this isn’t full augmented reality in the sense of spatial overlays or advanced graphics, this is a step toward AR becoming more mainstream and discreet.

New Interaction Paradigms: The Neural Band and EMG control hint at future interfaces beyond touch/voice. This could influence how wearables are controlled going forward.

Raising the Bar for Competitors: Apple, Google, Snap, and others are all working in this space. By combining a display, AI, and natural controls, Meta puts pressure on its rivals to keep up.

The Meta Ray-Ban Display glasses are a major step in wearable AI: adding a visual display, gesture control, and richer AI integration moves smart glasses from "novelty" toward "useful tool." But as with most early-stage tech, real usability will depend heavily on execution: comfort, battery life, display clarity, privacy, and software polish. If Meta pulls it off, these glasses could mark the beginning of a shift in how we interact with tech in everyday life, making glanceable, hands-free AI part of the ambient layer rather than something we take out and hold.

As wearable technology like the Meta Ray-Ban Display glasses continues to push the boundaries of what’s possible, staying informed about the latest tech trends and innovations is more important than ever. At El Brand, we help businesses and enthusiasts alike navigate the evolving landscape of technology, marketing, and brand storytelling—sharing insights, updates, and inspiring stories from the forefront of innovation. Want to keep up with the latest in tech and brand trends? Read more on our blog and never miss an update.